Assignment Makeovers in the AI Age: Essay Edition

This post is getting some traction, so I wanted to let readers know that I run a newsletter and podcast called Intentional Teaching. I explore topics like AI and teaching regularly in those venues, so subscribe today!

Last week, I explored some ways an instructor might want to (or need to) redesign a reading response assignment for the fall, given the many AI text generation tools now available to students. This week, I want to continue that thread with another assignment makeover. Reading response assignments were just the warm-up; now we’re tackling the essay assignment.

Here’s an expository essay assignment I gave the students in my 2012 first-year writing course on the history and mathematics of cryptography. It’s an older assignment, but I think it’s representative of a lot of essay assignments out there. Also, I converted this into a podcast assignment shortly thereafter, but making over a podcast assignment will have to wait for a future blog post. Here’s an excerpt from the assignment to give you a sense of it:

In this paper, you’ll describe the origin, use, influence, and mechanics of a code or cipher of your choice. There are many codes and ciphers we won’t have time to explore in this course. This assignment will provide you a chance to explore a piece of the history of cryptography that interests you personally and to share that exploration with an authentic audience. Your “paper” will take the form of a blog post between 1,000 and 1,500 words that will be submitted for publication on the blog Wonders & Marvels, a history blog edited by Vanderbilt prof Holly Tucker.

You’ll need to adopt a writing voice appropriate to Wonders & Marvels, interest your audience in your chosen code or cipher, and explain the mechanics of your code or cipher (enciphering, deciphering, decryption) in ways your audience can understand. The technical side of your post is the closest you’ll come to the kind of writing that mathematicians do, so be sure to be clear, precise, and concise. This assignment will require some research on your part, and you’ll need to cite your sources in your blog post.

This was a fairly innovative assignment for 2012 in that it presented students with an opportunity to write for an authentic audience external to the course. Here’s a bit of background on this part of the assignment, if you’re interested.

As I’ve been thinking about making over assignments in this new age of AI, I’ve been using a set of six questions to help me think through this task:

- Why does this assignment make sense for this course?

- What are specific learning objectives for this assignment?

- How might students use AI tools while working on this assignment?

- How might AI undercut the goals of this assignment? How could you mitigate this?

- How might AI enhance the assignment? Where would students need help figuring that out?

- Focus on the process. How could you make the assignment more meaningful for students or support them more in the work?

Let’s work through those questions with this old expository essay assignment.

Why does this assignment make sense for this course?

The course is a first-year writing seminar and, as such, is meant to prepare students for the kinds of writing they will be expected to do in college. This assignment was one of three big writing assignments, and it focuses on two kinds of writing: technical writing (explaining mathematical ideas in a way the audience can understand) and storytelling (hooking that audience with some kind of narrative structure). Both of these are useful for the kinds of research papers my students would likely be assigned in future courses.

Another role this assignment plays in the course is one of motivation. By asking students to select a topic of interest, I’m giving them a chance to find some intrinsic value in the assignment and the course more generally. The opportunity to write for an authentic audience also plays a motivational role, as I’ve found through these kinds of assignments.

What are specific learning objectives for this assignment?

Drilling down into what the assignment calls for, there are objectives around technical writing, like getting a sense of the mathematical background of the audience and then crafting explanations that work for that audience. There are storytelling objectives, like engaging readers through good openings and building good narrative structures. And there are more general research and writing skills, like selecting topics, finding and evaluating credible sources, and drafting and revising.

How might students use AI tools while working on this assignment?

Here’s where we need to experiment with the currently available AI tools. Let’s go with the obvious first test and see if ChatGPT (version 4, the paid version) can write a decent essay using the assignment prompt itself, slightly modified. One irony here: In an effort toward transparent teaching, my assignment descriptions are very clear about my expectations for student work, which makes it pretty easy to cut and paste those descriptions into ChatGPT to shortcut that work. Sigh.

You can read ChatGPT’s entire essay, but here’s the first part of it:

This essay has some problems. (1) It’s too short, clocking in at around 750 words. I hear that’s ChatGPT’s output limit, so maybe that’s understandable, but the assignment calls for at least 1,000 words. (2) The essay is all by-the-numbers, walking through the origin, use, and so on of the cipher machine just as I asked it to. There’s no attempt to hook the reader or do any storytelling. (3) Its references are dodgy, as we’ve come to expect from ChatGPT. For instance, it references a piece by Kozaczuk, but gets the date wrong, leaves off a co-author, and totally mangles the publication venue. (4) It picked a bad topic! This assignment asks students to select a code or cipher not well covered in the course, but we spend two weeks on the Enigma machine!

I’m sure the essay has other problems, but I decided four was enough. I pulled out the rubric for this assignment and scored ChatGPT’s work. It got a low D, losing a chunk of points for poor topic selection alone.

That’s maybe reassuring, but if we really want to kick the tires on this, we need to think like a student making creative use of AI tools. Suppose our hypothetical student realizes that the Enigma machine is a poor choice. They could ask ChatGPT for other options. I did that, and ChatGPT listed nine more codes and ciphers from history. Of those nine, seven were ones we cover in the course, but two of them (the Zodiac Killer’s ciphers and the Voynich manuscript) we don’t, and either one of those topics would work well for this assignment. Just to be sure, I asked ChatGPT for more codes and ciphers, and it gave me nine more, this time identifying four good paper topics, including another World War II cipher machine, the Purple cipher.

Our hypothetical student might then ask ChatGPT to write an essay about the Purple cipher, using the same prompt as before but specifying the topic. I tried that, and the results were similar; the resulting essay had the same kinds of problems as the first one. The better topic might pull the essay up to a high D, but we’re still a long way from a decent grade for the AI.

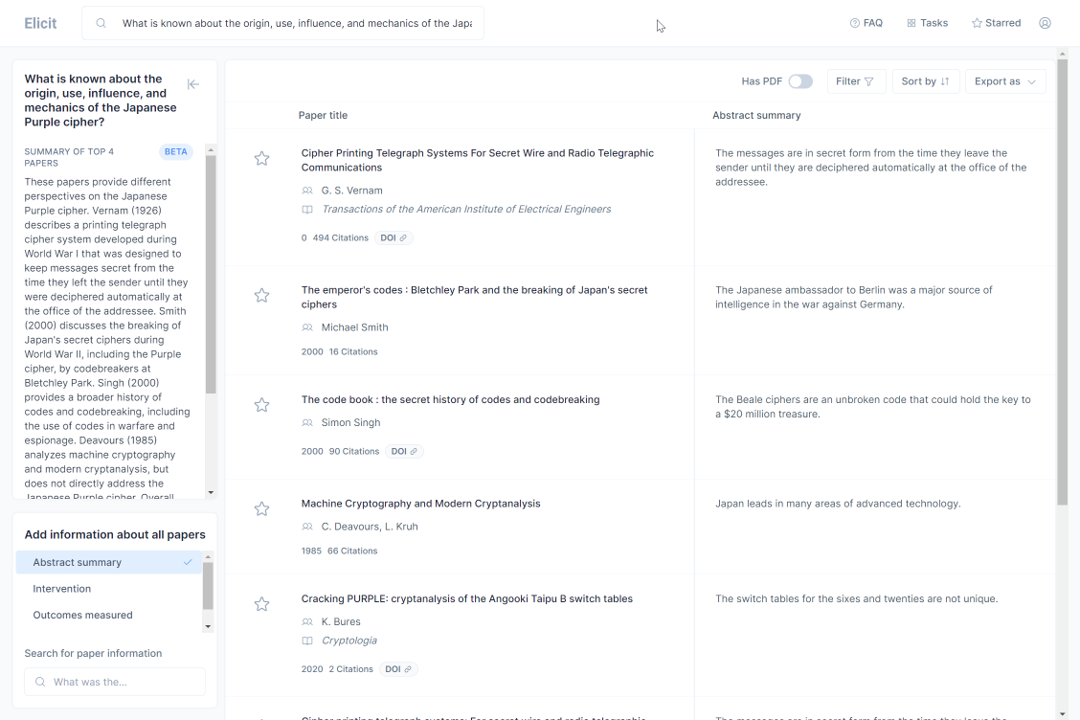

However, we’re not done. What if our student wanted to write a decent paper or maybe revise ChatGPT’s effort in significant ways? They might want to track down some credible sources to help them do so. I turned first to Elicit, an AI tool that searches Semantic Scholar’s database of scholarly articles, and I asked the tool, “What is known about the origin, use, influence, and mechanics of the Japanese Purple cipher?” Elicit returned a list of scholarly sources with some AI-generated summaries of those sources:

I checked out a few of these, either using Elicit’s summary or pulling up the source itself. A couple of them are both credible and potentially relevant. Our hypothetical student would need to do a little work to sift through Elicit’s recommendations, but they would come away with at least a couple of usable references.

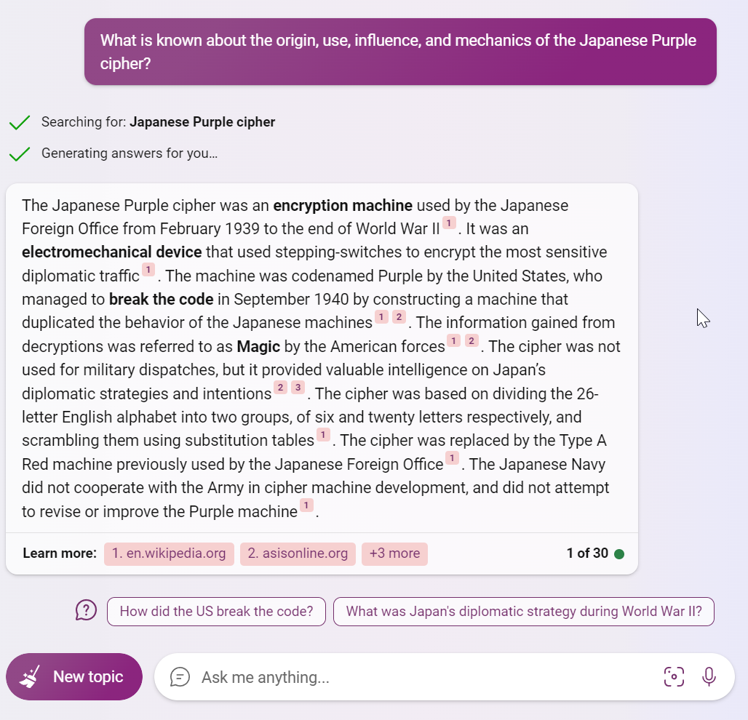

Next, I turned to Bing, which has a chat feature powered by AI. It can search the web, so might it return additional useful sources? No, not really:

Bing not only tries to answer the given question coherently, it also cites its sources. One is Wikipedia, sure, fine. Two others are what I would call potentially dodgy. Both are short pieces on the Purple machine posted on the websites of businesses or organizations. Both would require some investigation re: their credibility, but neither piece has much to say to begin with. One of Bing’s other sources? An essay one of my 2012 students wrote about the Purple machine for this very assignment! His essay was published on Wonders & Marvels, my colleague’s blog on the history of science and medicine, and then picked up by Gizmodo for a much wider audience.

However, Bing offered one thing I found very interesting. After it attempted to tell me about the Purple machine, it offered me other questions I might ask:

- How did the US break the code?

- What was Japan’s diplomatic strategy during World War II?

- What was the impact of breaking Purple?

- How did the Japanese react to the breaking of Purple?

For an enterprising student who wants to write a good paper about the Purple machine, these are very useful questions to investigate!

I could keep experimenting with this all day, but let’s wrap this up with one more test. What if I went back to ChatGPT and gave it a different prompt about the Purple machine, one that might generate a better essay? I tried a much simpler prompt: “Tell me an exciting story about the breaking of the Japanese Purple cipher.” You can read ChatGPT’s response (scroll to the bottom). Here’s the first part:

That’s pretty decent! It’s certainly more interesting to read than the first by-the-numbers version. There are no sources cited here, and the essay is still too short, but our hypothetical student could probably turn it in for a C grade. Or they might use this new draft, along with the earlier version and what they’ve learned about the Purple cipher from their new sources, to write a B paper or better.

Okay, back to our assignment makeover questions…

How might AI undercut the goals of this assignment? How could you mitigate this?

ChatGPT is pretty good at technical writing. You can even ask it to simplify (or complexify) its explanations, so this goal of the assignment is one where AI tools could really be a crutch for students. And as that essay on the Purple machine shows, ChatGPT is pretty good at storytelling, too. My more general writing goals (drafting, receiving feedback, revising) are also in trouble, since these AI tools are such good wordsmiths.

Writing for a public audience might mitigate these temptations, since there’s more accountability for students to own their own words. But it might also increase the pressure students feel to write something polished, leading some students to lean on the AI tools. Letting students pick their own topics will help, since students who have some intrinsic motivation to learn about the topic are more likely to do the hard work. But for students who want an easy way to complete the assignment, AI certainly provides that.

How might AI enhance the assignment? Where would students need help figuring that out?

I think the win here is that using AI tools like Elicit and Bing provides a great opportunity to talk with students about how we find sources and evaluate their credibility. ChatGPT’s unsourced story about the Purple machine can be useful, too, since it’s an opportunity to talk about why we need credible sources. Why should I trust what ChatGPT writes to begin with?

I’m not sure if AI tools are more useful for having these conversations with students than other tools like Google Search or Wikipedia, but they are of the moment, so this fall semester we should lean into these conversations with students.

Focus on the process. How could you make the assignment more meaningful for students or support them more in the work?

At the end of last week’s assignment makeover post, I quoted Anna Mills’ March 2023 Chronicle piece, where she advocates for instructors to focus on the process of writing. I keep coming back to that piece because her advice is so good. How might I make this essay assignment more meaningful to students? Or communicate to them the value of learning to write in these ways? Or support my students in their work during this assignment?

Short of dramatically revising this essay assignment (which is something I might tackle in a future blog post, since it later turned into a podcast assignment), I think focusing on the process is my best option for revising this assignment. I can imagine pairing students up to share drafts of their essays and provide each other feedback as an in-class activity. Even if the student’s draft is AI-generated, the feedback they would need to receive and process from their peer would still (hopefully) push them to think about technical communication and storytelling. I would probably want each student to identify for me three important changes they’ll make to their drafts based on the peer feedback. Even if they ask ChatGPT to make those changes, identifying the changes to make is an important step.

I would definitely share ChatGPT’s first draft with my students during class and have the students use the assignment rubric to evaluate it. I’ve often had a student volunteer to share a draft of their essay for peer evaluation using the rubric (and a classroom response system), but ChatGPT’s draft would be a better choice. None of the students would feel the need to hold back on harsh critiques out of fear of hurting the writer’s feelings! And this activity would lead to a very useful discussion about writing and my expectations for the assignment.

Now what?

Looking over my responses to the last three questions, I come to one very difficult question I need to answer about this assignment: Am I going to teach students to write, or to write with AI tools like ChatGPT? The tools I’ve mentioned here are, in fact, useful for writing this kind of essay, and most of them are, I think, useful for learning to write in these modes (technical writing, storytelling). It’s not practical to ban AI text generation tools (they’ll soon show up in Google Docs and Microsoft Word, among other places), but I could probably tell my students we’re going to run this assignment once without AI and once with AI. That might be a useful process.

If this were, say, a journalism class full of journalism majors and if the field of journalism had well defined standards and practices around the use of AI tools, then I could use those standards and practices to guide the use of AI by my students. I could easily say to them, “We’re going to use AI for these purposes but not for these other purposes, because that’s what you’ll be expected to do as a journalist.”

However, (a) the field of journalism hasn’t developed those standards and practices and (b) this is a first-year writing seminar designed to prepare students for future academic writing. And academia certainly hasn’t figured out its standards and practices regarding AI tools, either! If I were teaching this course this fall, it’s likely my students would have professors in the spring who would ban the use of tools like ChatGPT, which makes it difficult for me to lean into the tools for this course.

I don’t have a great answer to this big question, so I’ll leave you with another metaphor. I argued in an earlier blog post that learning to write with AI tools might be like learning photography with a DSLR camera, where the conceptual learning (light, motion, composition) is enhanced by the technical learning (figuring out what all those knobs and buttons on the camera do). Several years ago, iPhone cameras got good enough that someone who doesn’t know much about photography could use an iPhone camera in auto mode to take a picture that was as good as what I could do with my photography skills and a DSLR camera, at least in many circumstances. There are still circumstances where my camera and my photography skills shine, like some low-light conditions or sports photography or birdwatching with a telephoto lens.

Might AI tools end up working similarly? For many writing circumstances (say, an email to the campus about parking logistics for the football game), a few prompts to the chatbot will produce something as good as what an expert can write, but experts will still be needed for more challenging writing projects (e.g., providing a struggling student with academic and career advice). Betsy Barre made a similar argument in a recent episode of the Tea for Teaching podcast. And if we do find ourselves in that world, what does that mean for my first-year writing seminar?