On ChatGPT, Boilerplate, and Ghost Writing

Last week, the Office of Equity, Diversity, and Inclusion at Vanderbilt University’s Peabody College sent an email to students in response to the recent mass shooting at Michigan State University. Emails offering condolences, support, and links to resources are all too common in higher education today, and they often rely on boilerplate language. What’s not common is the use of ChatGPT, the AI text generator from OpenAI, to write such emails, but that’s what the Peabody administrators did. As reported in the Vanderbilt Hustler, the email included a parenthetical before the signature reading, “Paraphrase from OpenAI’s ChatGPT AI language model, personal communication, February 15, 2023.”

This did not land well. Here are some of the student reactions reported in the Hustler:

- “Disgusting… Deans, provosts, and the chancellor: Do more. Do anything. Lead us into a better future with genuine, human empathy, not a robot.”

- “It’s hard to take a message seriously when I know that the sender didn’t even take the time to put their genuine thoughts and feelings into words. In times of tragedies such as this, we need more, not less humanity.”

- “Automating messages on grief and crisis is the most on-the-nose, explicit recognition that we as students are more customers than a community to the Vanderbilt administration.”

The office sent a follow-up email with an apology, noting that the use of ChatGPT was “poor judgment.” Two administrators in that office have now “stepped back” from their positions as a result of the controversy.

What should we make of this? Let’s see… Someone uses ChatGPT to generate text for a writing task, and then the audience for that writing reacts negatively because they consider the use of an AI text generator to be wildly inappropriate for that kind of writing task. Sound familiar? This is very much how some faculty responded to student use of ChatGPT for writing assignments back in December and January. Many faculty, and indeed entire universities, moved to ban the use of ChatGPT on assignments.

I suspect that administrators at Vanderbilt and elsewhere now see ChatGPT as essentially banned by students for use in administrative communications. Or rather, I suspect administrators will avoid using ChatGPT for certain kinds of communications. As Matt Reed points out in his Inside Higher Ed column this week, the use of boilerplate text or even ChatGPT output for, say, an email about parking logistics probably wouldn’t generate the same kind of student outcry. Students don’t expect that kind of email to be personal in the same way an email about a mass shooting might be.

The problem for faculty, administrators, and students is that we don’t yet have established norms and expectations for the use of AI-generated text in higher education. Consider that the Vanderbilt email cited its use of ChatGPT. That email was sent out by an administrator who is also a faculty member, and it’s pretty easy for me to imagine that she felt she was modeling best practices by citing her use of ChatGPT in the email. That’s what many faculty are recommending to their students this semester, particularly those who are exploring the use of these tools as writing assistants.

Which norm is “right”? The one that says it’s okay to use ChatGPT to help you write, as long as you acknowledge your use of the tool? Or the one that says that for certain kinds of writing, the use of ChatGPT is wildly inappropriate? As a community, we haven’t figured that out yet, and I worry about imposing consequences on administrators or students before those norms and expectations are in place.

Here’s a sign that the norms haven’t been well established: One of the students quoted in that Hustler article said “that using ChatGPT is presumed to be cheating in academic settings.” That’s not actually true. Some faculty might presume that, and some faculty might have communicated that to the student. But the way the Vanderbilt Honor Code works, it’s up to individual instructors to determine for students what counts as unauthorized aid in their individual courses. And I know there are faculty at Vanderbilt who are exploring the use of ChatGPT in writing with their students, without presuming its use is cheating. The student is wrong in their assumption, but can you fault them for that given the heated discourse about ChatGPT over the last three months?

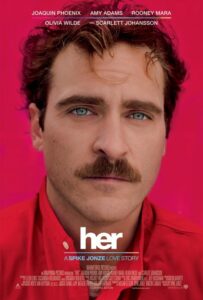

This week I decided it was finally time to watch the 2013 sci-fi movie Her, directed by Spike Jonze and starring Joaquin Phoenix. The film is about a man who develops a romantic relationship with his artificially intelligent personal assistant, voiced by actress Scarlett Johansson. Without spoiling the movie, I’ll note that the lead character works at a business where people ghost-write handwritten letters for customers, often very personal letters and often for the same clients over many years.

Given the reaction last week to the Vanderbilt email, it struck me as odd that in the future world of Her it’s socially acceptable to hire other humans to ghost-write letters to loved ones. Surely that’s a task we wouldn’t outsource, right? On the other hand, maybe Her takes place in a world where using AIs to write such letters is frowned upon, but having other humans write them is fine. That doesn’t make much logical sense to me, since it’s the ghost-writing that strikes me as impersonal, not the ghost writer. Still, I can imagine the kind of super-heated discourse we’re seeing in the real world about ChatGPT leading us to illogical places in how we think about these tools.

When will it be acceptable to have some writing assistance from a tool like ChatGPT? Emails about tragedies, maybe not. Emails about parking logistics, maybe so. Might there be some kinds of writing assignments in the classes we teach where ChatGPT is disallowed, but others where its use is allowed or even encouraged? My hope is that, as norms around these tools develop in higher education, we’ll take our time and work through these questions thoughtfully together.