Learning Analytics, Program Assessment, and the Scholarship of Teaching #EDUSprint

A few years ago my university was up for re-accreditation. Our accrediting body wanted every undergraduate major and professional program to submit a learning outcomes assessment plan. This meant that every department on campus needed to identify broad learning outcomes for its majors and programs, along with ways to assess those program-level outcomes. I sat in on a workshop for chairs intended to orient them to this requirement. When discussing assessment options, the workshop facilitator drew a distinction between direct and indirect measures of student learning. He shared a few examples of indirect measures:

- Responses to survey or interview questions asking students to rate their satisfaction with a learning experience – Satisfaction isn’t learning, so, yeah, this sounds indirect.

- Responses to survey or interview questions asking students to assess their own learning – Okay, sure, students might not accurately gauge their own learning, so this is indirect.

- Reflections by instructors on student learning and teaching methodologies – This one hurts a little, but I’ll grant that instructor self-reflection isn’t a direct measure of learning.

- End-of-course grades – Wait. What?

That last one generated some discussion. What could be a more direct measure of student learning than course grades? Isn’t that the entire purpose of course grades, to evaluate student learning? From the perspective of our accrediting body, not so much.

Here’s the argument for course grades as indirect, not direct, measures of student learning: Course grades are synthesized over the length of an entire course and often include non-learning measures like participation. Without detailed context and planning, it can be very difficult to match a student’s grade in a course to specific program-level learning outcomes. Knowing that a student receives an “A” in a course does not tell you what that student has learned.

What counts as a direct measure of student learning? Here are some examples:

- Student performance on standardized and locally developed exams

- Student work samples (essays, lab reports, quizzes, portfolios, etc.)

- Student reflections on their own values, attitudes, and beliefs

- Behavioral observations of students (in person, via video- or audiotape, etc.)

- Ratings of student skills by field experience supervisors

If you want to make the case to our accrediting body that your program is accomplishing its stated objectives, these are the kinds of evidence you’ll need to muster.

Given what I know about how course grades are calculated, I’m with the accrediting body on this one. If you want to understand what and how your students are learning within your program, direct measures of student learning are more useful than indirect ones.

It’s with this framework that I approach the topic of learning analytics. Learning analytics (LA) refers to “the use of data, statistical analysis, and explanatory and predictive models… to achieve greater success in student learning.” That definition is from a brief issued by the EDUCAUSE Learning Initiative (ELI) titled “Learning Analytics: Moving from Concept to Practice.” EDUCAUSE is hosting a three-day, online “sprint” on LA, featuring webinars, blog posts, forum discussions, and Twitter conversations (#EDUSprint). LA is a hot topic among campus information technology staff, and I’m glad to see EDUCAUSE organizing this event. (This blog post is helpfully tagged with #EDUSprint to add to the discussion.)

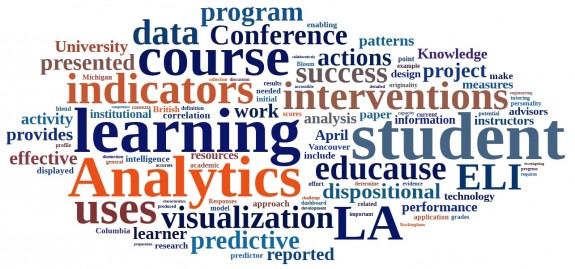

That said, the purposes and mechanisms of learning analytics described in that ELI brief seem limited to me, particularly in light of the distinction I see between direct and indirect measures of student learning. Check out the word cloud above, generated from the ELI brief. (Click on the image to see a larger version if you need to.) Three of the more frequently used words in the brief seem to me to sum up the current thinking on learning analytics: indicators, predictive, and interventions.

Indicators – A good chunk of the brief is devoted to describing the kinds of data that LA tools examine, including dispositional indicators (age, gender, high school GPA, current college GPA, “learning power” profiles, personality types, and so on) and activity and performance indicators (number and frequency of course management system logins, amount of time spent on course websites, number of discussion forum posts, and so on). What strikes me about these indicators is that they are largely indirect measures of student learning. They say a lot about what students bring to the learning process and what they do during the learning process, but they don’t really say much about what students are learning or how they’re making sense of course material.

Predictive – What do LA tools do with all this data? Most of them, as described in the brief, strive to predict student performance (as measured by exam performance and/or course grades) based on these indicators. “A student’s GPA alone can be an accurate predictor of a student’s performance in a course.” “The model attempts to predict if students will pass their current courses.” “Also of interest are indicators the university has found to have little or no predictive value.” Using data to predict student performance is a great idea, but I think data can do much more than that, particularly when the context of the course is taken into account. What are the concepts in introductory statistics or comparative politics or earth materials that are hardest for students to understand? What kinds of connections among topics in an interdisciplinary course on sustainability do students make? In what ways do students’ writing skills improve (or not) between freshman and sophomore year? These are questions that, I think, learning analytics can help answer, provided that more direct measures of learning are used.

Intervention – To what end do LA tools try to predict student performance in a course? Judging from the ELI brief (which may, of course, not be a comprehensive look at the field), most tools are geared toward providing early warning about students likely to do poorly in a course, warnings that allow instructional staff to intervene by providing these students with additional resources and support. This is a great use of data to benefit students, and I’m glad that very smart people are figuring out how to do this. However, the focus on informing interventions for individual students misses an opportunity to inform course-wide interventions. If we collect meaningful data on student learning and, instead of drilling down on individual students, we look for patterns across all the data collected, we just might find out a few things that could be used to improve the course for all students, not just those whose early warning signals are going off.

I’ll admit that I don’t track learning analytics as closely as I track other topics, like classroom response systems or social media in education. I know that there are at least a few others out there thinking about learning analytics along these lines. I heard a talk by David Wiley a year or two ago where he described using learning analytics to make sense of data collected from thousands of students enrolled in open education courses, all for the purpose of refining those courses to better meet student learning needs. And the ELI brief has a short section on the application of LA techniques to student artifacts such as essays, blog posts, and media productions. “This approach is far less common but has the potential to put LA ‘closer’ to the actual learning by detecting indications of competency and mastery.” That’s what I mean when I call for more direct measures of student learning. I’d like to see much more work in this area.

I’ll end this lengthy post by pointing out that there’s a community of faculty and staff who have been using direct measures of student learning to improve and refine courses and instruction for years: those who engage in the scholarship of teaching and learning (SoTL). If you want to know what kind of evidence of student learning faculty find useful for enhancing their teaching, talk to the faculty who are collecting such evidence. You can start by reading through this introduction to SoTL that I helped write and then maybe seeing what the International Society for the Scholarship of Teaching and Learning (ISSOTL) is up to.

My hope is that the learning analytics community will apply their technological and statistical skills to leveraging the kind of data that the SoTL community finds valuable, and both communities will learn a lot more about student learning and the teaching practices that promote it.

Image: “Cone and Shadow,” by me, Flickr (CC)