Prediction Questions, Simulations, and Times for Telling in #Math216

Last Friday was the first day of a unit on probability in my statistics course. In order to motivate this unit, the message I tried to send to students on Friday was the following: When it comes to estimating probabilities, our intuitions are often misleading, so we need mathematical models and tools to help us out. To prove that point, I asked my students a series of clicker questions about probabilities, the answers to which were intended to be surprising. For instance, here’s one I’ve used many times in the past:

Your sister calls to say she’s having twins. Which of the following is most likely? (Assume she’s not having identical twins.)

- Twin boys

- Twin girls

- One boy and one girl

- All are equally likely.

I used the “classic” peer instruction approach with this question, and it worked exactly as planned. The results of the first vote, during which I asked students not to discuss the question with each other, were a split decision: 55% went for option 4 (incorrect) and 43% went for option 3 (correct). I showed the students these results, hoping they would motivate discussion, and asked them to talk the question over with a neighbor and vote again. On the second vote, there was some convergence to the correct answer, with 63% choosing option 3 and only 38% sticking with option 4.

That was a good sign, but I wanted to make sure students understood this question. I asked for a couple of students to explain their reasoning, and they were on target. There are four possible outcomes here: boy-boy, boy-girl, girl-boy, and girl-girl. Each of those is equally likely (assuming a 50-50 gender breakdown), but two of them are the same “event,” one boy and one girl. So there’s a 50% chance of one boy and one girl, a 25% chance of two boys, and a 25% chance of two girls.
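For readers who like to see the counting spelled out, here is a minimal sketch that enumerates the four equally likely ordered outcomes and groups them into the three events from the clicker question. It makes the same assumption as the argument above: each twin is independently a boy or a girl with probability 1/2. The names `outcomes`, `event`, and `probabilities` are just illustrative.

```python
from collections import Counter
from fractions import Fraction
from itertools import product

# Assumption (as in the argument above): each twin is independently a boy ("B")
# or a girl ("G") with probability 1/2, so the four ordered outcomes
# BB, BG, GB, GG are equally likely.
outcomes = list(product("BG", repeat=2))

def event(pair):
    """Collapse an ordered outcome into the unordered event the question asks about."""
    boys = pair.count("B")
    return {2: "twin boys", 1: "one boy and one girl", 0: "twin girls"}[boys]

# Count how many equally likely outcomes fall into each event.
counts = Counter(event(pair) for pair in outcomes)
probabilities = {name: Fraction(n, len(outcomes)) for name, n in counts.items()}

for name, p in probabilities.items():
    print(f"{name}: {p}")
# twin boys: 1/4
# one boy and one girl: 1/2
# twin girls: 1/4
```

The key step is the `event` function: BG and GB are different outcomes but the same event, which is exactly why "one boy and one girl" gets twice the probability of either same-sex pair.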

Just in case students didn’t buy this argument, I had them conduct a simulation. I asked everyone to flip something, preferably a coin, though a clicker or student ID card would do. One side would represent a boy, the other a girl. Each student flipped twice, and I asked for a show of hands for each possible combination. I noted the count for each combination on the board, and then we repeated the simulation twice more. As expected, the percentage of flip pairs that produced one boy and one girl was close to 50%.
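The same coin-flip activity is easy to mimic in code. This is a rough sketch, not anything used in class: the function name `simulate_twins` and the choice of 225 pairs (75 students flipping pairs in three rounds) are assumptions for illustration, and the fixed `seed` only makes runs repeatable.

```python
import random

def simulate_twins(num_pairs, seed=None):
    """Flip a fair 'coin' twice per pair; return the fraction of mixed boy-girl pairs."""
    rng = random.Random(seed)
    mixed = 0
    for _ in range(num_pairs):
        first = rng.choice("BG")   # one side of the coin = boy, the other = girl
        second = rng.choice("BG")
        if first != second:        # BG or GB both count as "one boy and one girl"
            mixed += 1
    return mixed / num_pairs

# Roughly classroom-sized: 75 students, three rounds of paired flips.
print(simulate_twins(225, seed=1))
# Many more pairs: the fraction should settle very close to 0.5.
print(simulate_twins(100_000, seed=1))
```

A small run like the classroom one will bounce around 50% from round to round; a very large run shows the long-run proportion the enumeration predicts.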

I told students that it’s a common misconception to think that all the outcomes of some random process are equally likely to occur. That’s not always the case. Furthermore, if you take this idea to its extreme, you can reach some absurd conclusions. Then I showed students part of this clip from The Daily Show, in which a high school teacher and several major news outlets reach such a conclusion:

My goal with this sequence of activities was to create a time for telling. That is, I wanted students to want to learn about probability because it was demonstrated to them (via simulation) that their existing understanding of probability was limited. And I wanted them to get ready to learn about probability by calling to mind what they already knew (or thought they knew) about probability, so they would be “warmed up” (cognitively) to make sense of new information about the subject.

Clicker questions that ask students to predict something work great for creating times for telling, particularly if many students predict incorrectly. This is a situation where the technology, not just the pedagogy, matters. By using clickers, I’m asking every student to commit to a prediction by pressing the corresponding button on their clicker. That’s a very small form of commitment, but without it, the students would not be as invested in the outcome. Moreover, clickers allow me to show the distribution of predictions on the big screen for all students to see. Why is this important? Because “lots of students got this wrong” creates more of a time for telling than “I got this wrong.”

The other clicker questions I used in class Friday worked similarly. Here’s one that deals with the base rate fallacy:

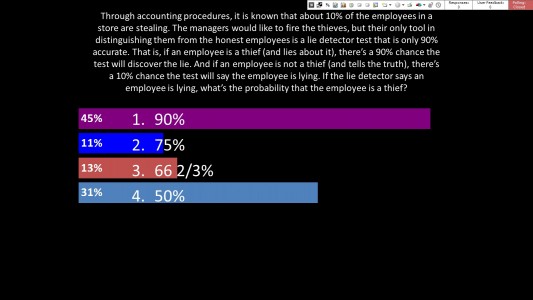

Through accounting procedures, it is known that about 10% of the employees in a store are stealing. The managers would like to fire the thieves, but their only tool in distinguishing them from the honest employees is a lie detector test that is only 90% accurate. That is, if an employee is a thief (and lies about it), there’s a 90% chance the test will discover the lie. And if an employee is not a thief (and tells the truth), there’s a 10% chance the test will say the employee is lying. If the lie detector says an employee is lying, what’s the probability that the employee is a thief?

- 90%

- 75%

- 66 2/3%

- 50%

In this case, there was a bit of convergence to the correct answer (option 4, from 16% on the first vote to 31% on the second), but I didn’t really expect students to get this right, since they hadn’t read about the calculation techniques needed to answer this question.

I did, however, want to convince them that the correct answer was closer to 50% than 90%, so I had them simulate this experiment, too. Since passing out 10-sided dice to 75 students would have been expensive, I used Excel to create a sheet with hundreds of random digits (0 to 9), passed out copies of this sheet, and had students select digits at random. On their first pick, a 0 meant the student was a thief and 1 to 9 meant they weren’t. On their second pick, a 0 meant the lie detector test gave an incorrect result and 1 to 9 meant it didn’t. I asked for a show of hands from those who were reported as thieves, and then found out how many of those students actually were thieves. After repeating this process three times, the percentage was somewhere south of 60%, definitely closer to 50% than 90%.
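The random-digit activity translates directly into a short simulation. This sketch follows the same two-digit rule described above (first digit 0 means thief, second digit 0 means the test gets it wrong); the function name `simulate_lie_detector` and the run sizes are hypothetical choices for illustration.

```python
import random

def simulate_lie_detector(num_employees, seed=None):
    """Mimic the random-digit activity with two digits (0-9) per simulated employee.

    First digit: 0 means the employee is a thief (10% chance).
    Second digit: 0 means the lie detector gives the wrong result (10% chance).
    Returns (number flagged as lying, number of flagged employees who are thieves).
    """
    rng = random.Random(seed)
    flagged = 0
    flagged_thieves = 0
    for _ in range(num_employees):
        is_thief = rng.randint(0, 9) == 0
        test_wrong = rng.randint(0, 9) == 0
        # A thief is flagged when the test is right; an honest employee is
        # flagged when the test is wrong. That is an exclusive-or.
        says_lying = is_thief != test_wrong
        if says_lying:
            flagged += 1
            if is_thief:
                flagged_thieves += 1
    return flagged, flagged_thieves

# About one class's worth of picks (75 students, three rounds).
flagged, thieves = simulate_lie_detector(225, seed=2)
if flagged:
    print(f"{thieves}/{flagged} flagged employees were thieves")

# A much larger run shows where the proportion settles.
flagged, thieves = simulate_lie_detector(200_000, seed=2)
print(f"share of flagged employees who were thieves: {thieves / flagged:.3f}")
```

With only a few dozen flagged employees per classroom-sized run, the observed share can easily land several points away from the long-run value, which is consistent with a class result a bit above 50%.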

This time I didn’t try to justify the correct answer mathematically. That would come later, when we covered conditional probability. As with the first clicker question, the point here was to show the students that there was something about probability they didn’t understand, in order to motivate their interest in conditional probability when we got to that topic.
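For readers who want the mathematical justification now, the standard tool is Bayes’ theorem. The post doesn’t show this calculation, so what follows is only a sketch using the numbers stated in the question: 10% of employees steal, the test flags 90% of thieves, and it wrongly flags 10% of honest employees. Exact fractions avoid any rounding.

```python
from fractions import Fraction

# Numbers from the question.
p_thief = Fraction(1, 10)              # P(thief)
p_flag_given_thief = Fraction(9, 10)   # P(test says "lying" | thief)
p_flag_given_honest = Fraction(1, 10)  # P(test says "lying" | honest)

# Law of total probability: chance that a randomly chosen employee is flagged.
p_flag = p_thief * p_flag_given_thief + (1 - p_thief) * p_flag_given_honest

# Bayes' theorem: P(thief | flagged) = P(flagged | thief) * P(thief) / P(flagged)
p_thief_given_flag = p_thief * p_flag_given_thief / p_flag

print(p_flag)              # 9/50
print(p_thief_given_flag)  # 1/2
```

The two ways of being flagged (a thief caught, 9% of employees, and an honest employee misread, also 9%) are the same size, which is why the answer comes out to exactly 50% rather than 90%.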

I’ll share more prediction questions from last Friday in a future blog post, and you can find ones used by other faculty in my book on teaching with clickers. In the meantime, I’m curious: Have you used prediction questions like these in your teaching?

Image: “IMG_9936e2,” Abby Bischoff, Flickr (CC)